If so, how does this work with AMD’s upcoming high-end Summit Ridge CPU’s? It is widely accepted that HSA does not deliver on its promise (at least under current architecture) if there is not a tight coupling of the CPU and GPU with a shared memory allocation, and also affected by latency problems with PCIe. Hence, i can buy a £300 laptop which supports HSA, but I cannot build a PC that leverages the power of my £600 graphics card and £300 CPU. Either they sort out this limitation of shared memory pool and latency over PCIe, they stick shaders on Summit Ridge, or, HSA has no part to play in their high-end offerings.

At least that is what I used to think, but perhaps I have been looking through the wrong end of the telescope.

What if we had been looking at AMD’s attempt to bolt its GPU cores onto ARM CPU’s when instead we should have been looking for them to bolt ARM cores onto AMD GPU’s? Not so much bringing HSA to the masses – they have already done that – but bringing it to the high-end.

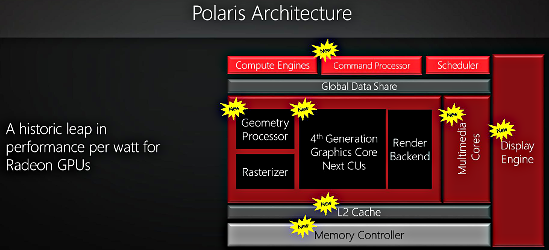

With Polaris/Vega being built on 14nm one thing they have to play with in abundance is die-space, four A72 ARM CPU cores is a drop in the ocean when you have 18 billion transistors to play with.

I have in the past posed the question thus:

If HSA is the future then we [must] presume it will one day function on their high-end/high-margin products, and not just the $300 Best-Buy boxes from which they scrape a few miserable dollars in profit.

If HSA [is] still the future of AMD then its high-end/high-margin products have two possible solutions to the current problem that i can see:

1. Summit Ridge comes with some shaders. Not many, not a significant proportion of total die space, but a useful number to enable HSA functionality. 256 high density shaders on 14nm would be very achievable with 8c/16t at 14nm inc L3 cache.

2. They find a way to extend shared memory allocation across PCIe, and look at technical solutions to reduce latency (3.0 was supposed to be better than 2.0, will 4.0 be better still?), and software solutions to mitigate the impact of that latency.

Well, as far as #1 is concerned all the rumours thus far indicate that the 8core/16thread high-end Zen CPU product will not have GPU cores bolted on.

As for #2, we cannot presume that these limitations can be just magicked away:

The HSA team at AMD analyzed the performance of Haar Face Detect, a commonly used multi-stage video analysis algorithm used to identify faces in a video stream. The team compared a CPU/GPU implementation in OpenCL™ against an HSA implementation. The HSA version seamlessly shares data between CPU and GPU, without memory copies or cache flushes because it assigns each part of the workload to the most appropriate processor with minimal dispatch overhead. The net result was a 2.3x relative performance gain at a 2.4x reduced power level*. This level of performance is not possible using only multicore CPU, only GPU, or even combined CPU and GPU with today’s driver model. Just as important, it is done using simple extensions to C++, not a totally different programming model.

So I propose #3 instead:

3. Polaris/Vega comes with four high performance ARM CPU cores embedded in the new “Command Processor” section of the GPU. The idea being that HSA tasks – which are architecture independent anyway – are passed across the PCIe bus from the CPU to the GPU, whereupon the GPU (with its onboard CPU’s), takes over the task in its entirety with the benefit of 8GB of ‘unified’ memory and 1000GB/s of bandwidth to supply the many thousands of GCN1.4 shaders.

AMD is like a hamster that is never allowed to get off its wheel if it wants to survive in a world dominated by Intel and Nvidia. Year after year, it must run and run. It can’t do that scraping a percentage margin on Best Buy bargain laptops, it needs to make some serious profit and that requires high-value / high-margin products.

But perhaps the answer is not to worry about the lack of HSA on Zen, or, to look quizzically at its ARM based server products. Perhaps, we should wait and see whether that Polaris/Vega Command Processor is hiding a bunch of ARM cores.

Update 20.05.2015 – good find by blueisviolet – https://twitter.com/blueisviolet/status/732194265577619458/photo/1 2GHz out-of-order ‘aggregator’ processor

An unexpected approach often helps to clarify the argument. But is the approach useful?

The Heterogeneous System Architecture’s advantage is in allowing multiple compute engines to share common memory. The CE’s can operate essentially independently, or they can pass a job back and forth so each CE can do what it does best at the time.

ARM and dGPU could share VRAM for local HSA. But they can only work on the data they have. Their only access to main memory is by PCIe. And if that was practical for random memory access, we would not need VRAM.

An HSA program might start on the CPU for branching, then move to the GPU for big data (or parallel simulation), then back to CPU, all while working on the exact same data items in common memory. This is the efficiency of using the appropriate CE for each part of the job, plus the lack of a need to copy data back and forth.

For HSA to be practical, CE’s need similar access to common memory. When a GPU is functioning as a CPU and thus accessing data at random, it needs CPU-like data access times. Those are not available over PCIe.

For dynamically moving between CE’s to be useful, shifting from one CE to another must be faster than computing the same task locally. Supposedly, AMD Carrizo implements unified hardware micro-task queueing and dispatch for both CPU and GPU at modest overhead.

thank you for the detailed reply.

do you believe there is merit in the notion of short-cutting the latency and bandwidth limitations of PCIe by shifting the cpu component onto the gpu?

No. I do not see it. Having a CPU on a GPU would be having APU-like peripherals on a main system. But while the individual APU’s could do their own local “HSA,” the benefits we expect from HSA come from shared common memory.

It is the shared memory which allows different processors to dig into the problem with their own contribution. It is shared memory which avoids copying. It is shared memory which allows the use of a single data structure to represent the problem, instead of selected, copied and generally unrelated parts.

The HSA advantage is low-latency CPU-class memory access to the same common memory from multiple compute units. PCIe cannot do that.

Hi again, thanks for the detailed reply.

Do you see a future for HSA on the high-end desktop such as 8c/16t Zen, as well as 4096 shader GPU’s such as Vega?

If not, should this matter?

HSA offers major advantages, but only when used. Just putting HSA-capabilities on the desktop will not, in itself, do much. But it would provide a better platform for HSA development, which cannot be bad.

HSA means APU. Actual HSA advantages require low-latency shared memory.

Zen + Vega + HBM sounds good, since we have almost nothing now beyond Kavari and laptop Carrizo. We may not see Zen APU’s for a year or more, but it would be a real kick to see them earlier.